Pre-installed Computer Vision Software

Comes with the OpenVINO™ toolkit preinstalled for hardware-accelerated deep learning inference in computer vision applications.

Hardware Acceleration

Harness the performance of Intel®-based accelerators for deep learning inference with the CPU, GPU, and VPU included in this kit.

Reduce Time to Field Trial

The kit includes a field-ready, mountable aluminum enclosure and a camera. Wi-Fi and cellular module add-ons are available for purchase.

Benefits of the OpenVINO™ toolkit

Accelerate Performance

Access Intel computer vision accelerators to speed up code performance.

Supports heterogeneous processing and asynchronous execution.
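
Asynchronous execution means the host does not block while an inference request runs on an accelerator; it keeps preparing the next frame so the CPU and the VPU work in parallel. The sketch below illustrates that pattern using only Python's standard library. It does not use the OpenVINO™ API: `fake_infer` is a hypothetical stand-in for a device inference call, and the thread pool plays the role of a small set of in-flight infer requests (`num_requests` is an assumed tuning parameter).

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import List


def fake_infer(frame_id: int) -> str:
    """Hypothetical stand-in for an inference call on an accelerator such as the VPU."""
    time.sleep(0.01)  # simulated device latency
    return f"result-{frame_id}"


def run_pipelined(num_frames: int, num_requests: int = 2) -> List[str]:
    """Keep up to `num_requests` inferences in flight while the host prepares the next frame."""
    results: List[str] = []
    in_flight: List[Future] = []
    with ThreadPoolExecutor(max_workers=num_requests) as pool:
        for frame_id in range(num_frames):
            # Host-side work for this frame (capture, resize, etc.) would go here.
            in_flight.append(pool.submit(fake_infer, frame_id))
            # When every request slot is busy, collect the oldest before starting more.
            if len(in_flight) == num_requests:
                results.append(in_flight.pop(0).result())
        # Drain whatever is still running, oldest first.
        for future in in_flight:
            results.append(future.result())
    return results


if __name__ == "__main__":
    print(run_pipelined(5))
```

With `num_requests=2` this is classic double buffering: while frame N is being inferred, frame N+1 is already submitted, and results still come back in frame order because the oldest request is always collected first.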

Integrate deep learning

Unleash convolutional neural network (CNN)-based deep learning inference using a common API and 10 trained models.

Speed development

Reduce development time with a library of optimized OpenCV* and OpenVX* functions and 15+ samples.

Develop once, deploy for current & future Intel-based devices.
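
One way to picture "develop once, deploy" combined with heterogeneous processing: the application describes the network once, and a device priority list decides where each layer runs. OpenVINO™ expresses such a list with device strings like `HETERO:MYRIAD,CPU`, where, roughly speaking, each layer goes to the first listed device that supports it and unsupported layers fall back to the next one. The following is a plain-Python model of that fallback rule, not the toolkit's implementation; the per-device layer sets and the `MyCustomOp` layer type are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical capability table: which layer types each device can run.
# Real support depends on the toolkit release and plugin.
SUPPORTED_LAYERS: Dict[str, Set[str]] = {
    "MYRIAD": {"Convolution", "ReLU", "Pooling", "SoftMax"},
    "GPU": {"Convolution", "ReLU", "Pooling", "SoftMax"},
    "CPU": {"Convolution", "ReLU", "Pooling", "SoftMax", "MyCustomOp"},
}


def parse_priority(device: str) -> List[str]:
    """Turn 'HETERO:MYRIAD,CPU' into ['MYRIAD', 'CPU']; a plain 'CPU' becomes ['CPU']."""
    if device.startswith("HETERO:"):
        return device[len("HETERO:"):].split(",")
    return [device]


def assign_layers(layers: List[Tuple[str, str]], device: str) -> Dict[str, str]:
    """Map each (name, type) layer to the first device in the priority list that supports it."""
    priority = parse_priority(device)
    placement: Dict[str, str] = {}
    for name, layer_type in layers:
        for dev in priority:
            if layer_type in SUPPORTED_LAYERS.get(dev, set()):
                placement[name] = dev
                break
        else:  # no device in the list supports this layer type
            raise ValueError(f"no device in {priority} supports {layer_type!r} ({name})")
    return placement


# The same network description is reused unchanged for every target.
NETWORK = [
    ("conv1", "Convolution"),
    ("relu1", "ReLU"),
    ("pool1", "Pooling"),
    ("custom1", "MyCustomOp"),
    ("prob", "SoftMax"),
]

if __name__ == "__main__":
    print(assign_layers(NETWORK, "HETERO:MYRIAD,CPU"))
    print(assign_layers(NETWORK, "CPU"))
```

With `HETERO:MYRIAD,CPU`, every layer lands on the VPU except the hypothetical `custom1`, which falls back to the CPU; passing `CPU` instead retargets the whole network without touching `NETWORK`.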

Innovate & customize

Use the growing repository of OpenCL™ starting points in OpenCV* to add your own unique code.

UP Squared AI Vision X Developer Kit

| What's in the box |
|---|
| VESA-mountable edge computer with an Intel Atom® x7-E3950 processor, 8 GB memory, and 64 GB eMMC, preloaded with an Ubuntu image (kernel 4.15) and the OpenVINO™ toolkit R5 |
| 5 V / 6 A power supply |
| EU/US power cord |
| UP AI Core X with integrated Intel® Movidius™ Myriad™ X VPU (version B) |
| USB camera with a maximum resolution of 1920 x 1080 at 30 fps |

| Software |
|---|
| Ubuntu 16.04 Desktop |
| OpenVINO™ toolkit R5 |
| Intel® System Studio 2018 Community Edition with Eclipse IDE |
| Drivers for Intel® VTune™ Amplifier, Intel® Energy Profiler, and Intel® Graphics Performance Analyzers |
| MRAA and UPM I/O and sensor libraries for C++, Python, Java, and JavaScript |

USB Vision Camera

| USB Vision camera | |
|---|---|
| Maximum resolution | Full HD, 1920 x 1080 |
| Supported resolutions (MJPEG) | 320 x 240, 352 x 288, 640 x 480 (VGA), 800 x 600 @ 60 fps; 1024 x 768, 1280 x 720, 1280 x 1024, 1920 x 1080 @ 30 fps |
| Pixel formats | MJPEG, YUY2 (YUYV) |
| Focus | Manual |
| Sensor | 1/2.7" OV2735 |
| Minimum illumination | 0.05 lux |
| Interface | USB 2.0 High Speed |
| Lens | 3.6 mm focal length |
| OTG support | USB 2.0 OTG |
| Driver | Driver-free, USB Video Class (UVC) |
| Dimensions / weight | 41 x 41 x 41.5 mm / 245 g |
| Exposure | Auto |
| White balance | Auto |
| AGC (automatic gain control) | Supported |
| Operating temperature | -20°C to 80°C |
| Operating humidity | 30% to 90% RH |
| Power | 5 V DC |
| Cable length | 1 m |
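
The camera's supported resolutions are listed as MJPEG modes, and its interface is USB 2.0 High Speed. A quick bandwidth estimate, assuming uncompressed YUY2 at 16 bits per pixel, suggests why compression matters at the higher resolutions: raw 1080p at 30 fps needs roughly twice the 480 Mbit/s USB 2.0 signaling rate, and usable throughput on the bus is lower still. This is back-of-the-envelope arithmetic, not a measured figure for this camera.

```python
USB2_HIGH_SPEED_BPS = 480_000_000  # USB 2.0 High Speed signaling rate, bits per second
YUY2_BITS_PER_PIXEL = 16           # YUY2 (YUYV) is packed 4:2:2: 4 bytes per 2 pixels


def raw_yuy2_bitrate(width: int, height: int, fps: int) -> int:
    """Bitrate of uncompressed YUY2 video in bits per second."""
    return width * height * YUY2_BITS_PER_PIXEL * fps


if __name__ == "__main__":
    for width, height, fps in [(640, 480, 30), (1280, 720, 30), (1920, 1080, 30)]:
        bps = raw_yuy2_bitrate(width, height, fps)
        share = bps / USB2_HIGH_SPEED_BPS
        print(f"{width}x{height}@{fps}: {bps / 1e6:.1f} Mbit/s ({share:.0%} of USB 2.0 signaling rate)")
```

It reports about 147.5, 442.4, and 995.3 Mbit/s for the three modes; only the VGA mode leaves comfortable headroom on the bus without compression.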

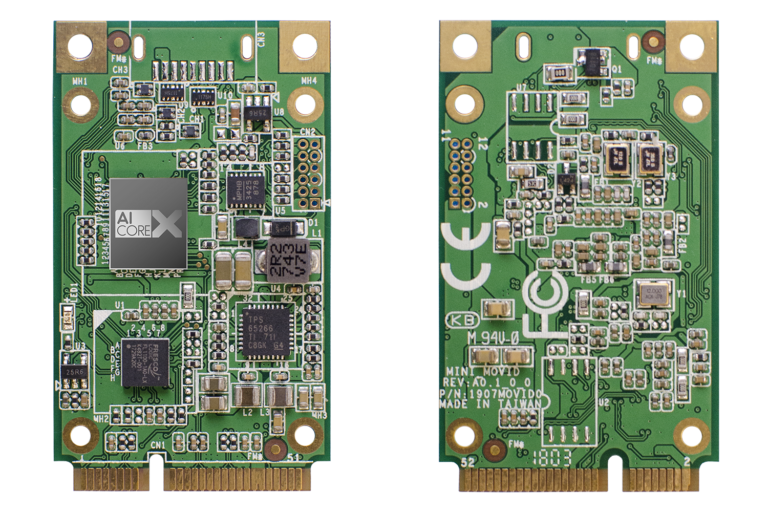

AI Core X Specifications

| UP AI Core X | |
|---|---|
| SoC | Intel® Movidius™ Myriad™ X VPU 2485 |
| Number of VPUs | 1 |
| Form factor | Mini PCI Express |
| Memory | 4Gb LPDDR4 x1 |
| Dimensions | 30 x 51 mm (1.18" x 2.00") |
| Thermal | Fanless heatsink |
| Supported frameworks | Caffe, TensorFlow, MXNet, Kaldi, ONNX |
| Minimum system requirements | x86_64 computer running Ubuntu 16.04, 1 GB memory, 4 GB free storage, a vacant mini PCIe expansion slot |
| Software | Intel® Distribution of OpenVINO™ toolkit R4 or later |

UP Squared Specifications

| UP Squared | |
|---|---|
| SoC | Intel Atom® x7-E3950 (up to 2.0 GHz) |
| Graphics | Intel® HD Graphics (Gen 9) |
| Video and audio | 1x HDMI 1.4b (4K @ 30 Hz), 1x DP 1.2 (4K @ 60 Hz) |
| Camera interface | CSI 2-lane + CSI 4-lane (pass-through only) |
| Memory | 8 GB |
| Storage | 64 GB eMMC |
| USB | 3x USB 3.0 (Type-A), 1x USB 3.0 OTG (Micro-B), 2x USB 2.0 + 2x UART (Tx/Rx) |
| Ethernet | 2x Gigabit Ethernet (RJ-45), full speed |
| RTC | Yes |
| Expansion | 40-pin general-purpose bus, 60-pin EXHAT, 1x mini PCIe, M.2 2230, SATA3 |
| OS support | Microsoft Windows 10 (full), Windows IoT Core, Linux (ubilinux, Ubuntu, Yocto), Android Marshmallow |
| Dimensions | 85.6 x 90 mm |

Expand your UP Squared board with a wide range of connectivity add-ons.